Try Different Label Smoothing Values And More Epochs

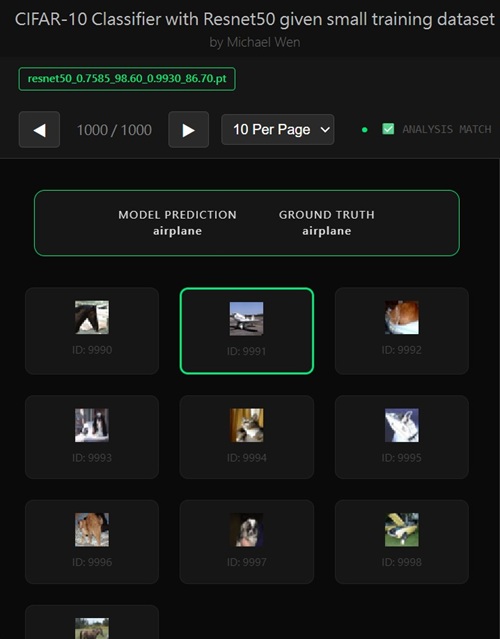

I wrote an app to classify an object using transfer learning, and the model was trained on the CIFAR-10 dataset, all by myself.

Label Smoothing Revisited: Strong Gains with Proper Tuning

What Changed

Initial experiments with label smoothing showed only marginal improvement. However, after increasing the smoothing factor from ε = 0.10 to ε = 0.15 and extending training from 20 to 60 epochs, validation accuracy improved substantially:

– Before: 82.1%

============================== Training model: resnet18 ==============================
Epoch [01/20] Train Loss: 1.4383 | Val Loss: 2.1212 | Train Acc: 50.10% | Val Acc: 56.00%
Epoch [02/20] Train Loss: 0.4398 | Val Loss: 2.3636 | Train Acc: 85.80% | Val Acc: 59.00%
Epoch [03/20] Train Loss: 0.2316 | Val Loss: 1.4054 | Train Acc: 92.50% | Val Acc: 70.50%
Epoch [04/20] Train Loss: 0.1048 | Val Loss: 1.1404 | Train Acc: 97.50% | Val Acc: 73.50%
Epoch [05/20] Train Loss: 0.0683 | Val Loss: 0.9930 | Train Acc: 98.30% | Val Acc: 75.00%
Epoch [06/20] Train Loss: 0.0504 | Val Loss: 0.9246 | Train Acc: 99.00% | Val Acc: 76.00%
Epoch [07/20] Train Loss: 0.0265 | Val Loss: 0.8353 | Train Acc: 99.50% | Val Acc: 78.00%
Epoch [08/20] Train Loss: 0.0165 | Val Loss: 0.8222 | Train Acc: 99.80% | Val Acc: 78.10%
Epoch [09/20] Train Loss: 0.0178 | Val Loss: 0.8403 | Train Acc: 99.70% | Val Acc: 79.40%
Epoch [10/20] Train Loss: 0.0163 | Val Loss: 0.7646 | Train Acc: 99.90% | Val Acc: 80.70%
Epoch [11/20] Train Loss: 0.0093 | Val Loss: 0.7346 | Train Acc: 99.90% | Val Acc: 80.40%
Epoch [12/20] Train Loss: 0.0077 | Val Loss: 0.7318 | Train Acc: 100.00% | Val Acc: 80.50%
Epoch [13/20] Train Loss: 0.0044 | Val Loss: 0.7445 | Train Acc: 100.00% | Val Acc: 80.70%
Epoch [14/20] Train Loss: 0.0036 | Val Loss: 0.7777 | Train Acc: 100.00% | Val Acc: 79.90%
Epoch [15/20] Train Loss: 0.0104 | Val Loss: 0.7921 | Train Acc: 99.80% | Val Acc: 79.60%
Epoch [16/20] Train Loss: 0.0032 | Val Loss: 0.7568 | Train Acc: 100.00% | Val Acc: 80.00%
Epoch [17/20] Train Loss: 0.0031 | Val Loss: 0.7208 | Train Acc: 100.00% | Val Acc: 80.00%
Epoch [18/20] Train Loss: 0.0049 | Val Loss: 0.6775 | Train Acc: 99.90% | Val Acc: 80.80%
Epoch [19/20] Train Loss: 0.0033 | Val Loss: 0.6717 | Train Acc: 100.00% | Val Acc: 81.70%
Epoch [20/20] Train Loss: 0.0022 | Val Loss: 0.6731 | Train Acc: 100.00% | Val Acc: 82.10%
Best results for resnet18: Train Loss: 0.0022 | Val Loss: 0.6731 | Train Acc: 100.00% | Val Acc: 82.10%
Training Time: 82.25 seconds

– After: 84.7%

============================== Training model: resnet18 ==============================
Epoch [01/60] Train Loss: 1.7027 | Val Loss: 2.4931 | Train Acc: 51.40% | Val Acc: 61.30%
Epoch [02/60] Train Loss: 1.0935 | Val Loss: 2.0536 | Train Acc: 87.00% | Val Acc: 70.40%
Epoch [03/60] Train Loss: 0.9519 | Val Loss: 1.7454 | Train Acc: 93.60% | Val Acc: 66.40%
Epoch [04/60] Train Loss: 0.8665 | Val Loss: 1.4269 | Train Acc: 97.40% | Val Acc: 72.50%
Epoch [05/60] Train Loss: 0.8106 | Val Loss: 1.3331 | Train Acc: 99.00% | Val Acc: 75.80%
Epoch [06/60] Train Loss: 0.7870 | Val Loss: 1.2334 | Train Acc: 99.40% | Val Acc: 78.70%
Epoch [07/60] Train Loss: 0.7732 | Val Loss: 1.2510 | Train Acc: 100.00% | Val Acc: 76.80%
Epoch [08/60] Train Loss: 0.7549 | Val Loss: 1.1939 | Train Acc: 100.00% | Val Acc: 79.60%
Epoch [09/60] Train Loss: 0.7469 | Val Loss: 1.1935 | Train Acc: 100.00% | Val Acc: 79.80%
Epoch [10/60] Train Loss: 0.7382 | Val Loss: 1.1568 | Train Acc: 99.90% | Val Acc: 80.90%
Epoch [11/60] Train Loss: 0.7335 | Val Loss: 1.1533 | Train Acc: 100.00% | Val Acc: 81.00%
Epoch [12/60] Train Loss: 0.7321 | Val Loss: 1.1363 | Train Acc: 100.00% | Val Acc: 81.40%
Epoch [13/60] Train Loss: 0.7251 | Val Loss: 1.1408 | Train Acc: 99.90% | Val Acc: 81.20%
Epoch [14/60] Train Loss: 0.7214 | Val Loss: 1.1371 | Train Acc: 100.00% | Val Acc: 81.90%
Epoch [15/60] Train Loss: 0.7208 | Val Loss: 1.1296 | Train Acc: 100.00% | Val Acc: 82.90%
Epoch [16/60] Train Loss: 0.7210 | Val Loss: 1.1330 | Train Acc: 100.00% | Val Acc: 82.20%
Epoch [17/60] Train Loss: 0.7173 | Val Loss: 1.1425 | Train Acc: 100.00% | Val Acc: 81.20%
Epoch [18/60] Train Loss: 0.7185 | Val Loss: 1.1356 | Train Acc: 100.00% | Val Acc: 81.70%
Epoch [19/60] Train Loss: 0.7134 | Val Loss: 1.1291 | Train Acc: 100.00% | Val Acc: 82.10%
Epoch [20/60] Train Loss: 0.7133 | Val Loss: 1.1348 | Train Acc: 100.00% | Val Acc: 81.60%
Epoch [21/60] Train Loss: 0.7152 | Val Loss: 1.1386 | Train Acc: 100.00% | Val Acc: 81.60%
Epoch [22/60] Train Loss: 0.7149 | Val Loss: 1.1336 | Train Acc: 100.00% | Val Acc: 81.50%
Epoch [23/60] Train Loss: 0.7132 | Val Loss: 1.1316 | Train Acc: 100.00% | Val Acc: 81.80%
Epoch [24/60] Train Loss: 0.7122 | Val Loss: 1.1234 | Train Acc: 100.00% | Val Acc: 83.00%
Epoch [25/60] Train Loss: 0.7116 | Val Loss: 1.1272 | Train Acc: 100.00% | Val Acc: 81.90%
Epoch [26/60] Train Loss: 0.7095 | Val Loss: 1.1240 | Train Acc: 100.00% | Val Acc: 82.40%
Epoch [27/60] Train Loss: 0.7105 | Val Loss: 1.1233 | Train Acc: 100.00% | Val Acc: 82.50%
Epoch [28/60] Train Loss: 0.7110 | Val Loss: 1.1229 | Train Acc: 100.00% | Val Acc: 82.30%
Epoch [29/60] Train Loss: 0.7073 | Val Loss: 1.1219 | Train Acc: 100.00% | Val Acc: 83.20%
Epoch [30/60] Train Loss: 0.7080 | Val Loss: 1.1227 | Train Acc: 100.00% | Val Acc: 82.30%
Epoch [31/60] Train Loss: 0.7066 | Val Loss: 1.1214 | Train Acc: 100.00% | Val Acc: 82.50%
Epoch [32/60] Train Loss: 0.7067 | Val Loss: 1.1262 | Train Acc: 100.00% | Val Acc: 82.40%
Epoch [33/60] Train Loss: 0.7062 | Val Loss: 1.1200 | Train Acc: 100.00% | Val Acc: 82.90%
Epoch [34/60] Train Loss: 0.7069 | Val Loss: 1.1136 | Train Acc: 100.00% | Val Acc: 82.70%
Epoch [35/60] Train Loss: 0.7061 | Val Loss: 1.1134 | Train Acc: 100.00% | Val Acc: 83.10%
Epoch [36/60] Train Loss: 0.7067 | Val Loss: 1.1050 | Train Acc: 100.00% | Val Acc: 83.10%
Epoch [37/60] Train Loss: 0.7069 | Val Loss: 1.1143 | Train Acc: 100.00% | Val Acc: 83.50%
Epoch [38/60] Train Loss: 0.7088 | Val Loss: 1.1168 | Train Acc: 100.00% | Val Acc: 83.40%
Epoch [39/60] Train Loss: 0.7064 | Val Loss: 1.1266 | Train Acc: 100.00% | Val Acc: 82.90%
Epoch [40/60] Train Loss: 0.7067 | Val Loss: 1.1157 | Train Acc: 100.00% | Val Acc: 82.60%
Epoch [41/60] Train Loss: 0.7080 | Val Loss: 1.1194 | Train Acc: 100.00% | Val Acc: 82.90%
Epoch [42/60] Train Loss: 0.7052 | Val Loss: 1.1122 | Train Acc: 100.00% | Val Acc: 82.60%
Epoch [43/60] Train Loss: 0.7062 | Val Loss: 1.1072 | Train Acc: 100.00% | Val Acc: 84.30%
Epoch [44/60] Train Loss: 0.7045 | Val Loss: 1.1163 | Train Acc: 100.00% | Val Acc: 83.90%
Epoch [45/60] Train Loss: 0.7037 | Val Loss: 1.1094 | Train Acc: 100.00% | Val Acc: 84.70%
Epoch [46/60] Train Loss: 0.7048 | Val Loss: 1.1132 | Train Acc: 100.00% | Val Acc: 83.40%
Epoch [47/60] Train Loss: 0.7049 | Val Loss: 1.1107 | Train Acc: 100.00% | Val Acc: 81.60%
Epoch [48/60] Train Loss: 0.7034 | Val Loss: 1.1145 | Train Acc: 100.00% | Val Acc: 83.40%
Epoch [49/60] Train Loss: 0.7042 | Val Loss: 1.1192 | Train Acc: 100.00% | Val Acc: 82.90%
Epoch [50/60] Train Loss: 0.7040 | Val Loss: 1.1133 | Train Acc: 100.00% | Val Acc: 83.60%
Epoch [51/60] Train Loss: 0.7018 | Val Loss: 1.1110 | Train Acc: 100.00% | Val Acc: 83.70%
Epoch [52/60] Train Loss: 0.7017 | Val Loss: 1.1055 | Train Acc: 100.00% | Val Acc: 82.50%
Epoch [53/60] Train Loss: 0.7018 | Val Loss: 1.1126 | Train Acc: 100.00% | Val Acc: 83.00%
Epoch [54/60] Train Loss: 0.7011 | Val Loss: 1.1032 | Train Acc: 100.00% | Val Acc: 83.30%
Epoch [55/60] Train Loss: 0.7022 | Val Loss: 1.1077 | Train Acc: 100.00% | Val Acc: 82.90%
Epoch [56/60] Train Loss: 0.7022 | Val Loss: 1.1050 | Train Acc: 100.00% | Val Acc: 83.80%
Epoch [57/60] Train Loss: 0.7039 | Val Loss: 1.1075 | Train Acc: 100.00% | Val Acc: 84.10%
Epoch [58/60] Train Loss: 0.7024 | Val Loss: 1.1102 | Train Acc: 100.00% | Val Acc: 83.70%
Epoch [59/60] Train Loss: 0.7022 | Val Loss: 1.1027 | Train Acc: 100.00% | Val Acc: 82.90%
Epoch [60/60] Train Loss: 0.7022 | Val Loss: 1.1059 | Train Acc: 100.00% | Val Acc: 83.60%
Best results for resnet18: Train Loss: 0.7037 | Val Loss: 1.1094 | Train Acc: 100.00% | Val Acc: 84.70%
Training Time: 241.71 seconds

This confirms that label smoothing can be highly effective when paired with sufficient training time and a well-chosen smoothing strength.
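To make the role of ε concrete, here is a minimal pure-Python sketch of how a smoothed target and its loss can be computed. It is an illustration rather than my training code: the helper names `smooth_targets` and `cross_entropy` are hypothetical, the logits are made up, and I am assuming the common convention in which ε is spread uniformly over all classes, including the true one. That is the convention used by the `label_smoothing` argument of PyTorch's `torch.nn.CrossEntropyLoss`.

```python
import math

def smooth_targets(label, num_classes, eps):
    """Return the smoothed target distribution for one integer label.

    Assumed convention: eps is spread uniformly over all classes,
    so the true class ends up with (1 - eps) + eps / num_classes.
    """
    off_value = eps / num_classes
    target = [off_value] * num_classes
    target[label] += 1.0 - eps
    return target

def cross_entropy(logits, target):
    """Cross-entropy between softmax(logits) and a target distribution."""
    m = max(logits)  # subtract the max for numerical stability
    log_z = m + math.log(sum(math.exp(z - m) for z in logits))
    return -sum(t * (z - log_z) for t, z in zip(target, logits))

NUM_CLASSES = 10              # CIFAR-10
logits = [8.0] + [0.0] * 9    # made-up, very confident prediction for class 0

for eps in (0.0, 0.10, 0.15):
    target = smooth_targets(0, NUM_CLASSES, eps)
    print(f"eps={eps:.2f}  loss={cross_entropy(logits, target):.4f}")
```

With hard labels (ε = 0) the confident prediction is almost free, while with ε = 0.15 the same logits are charged a noticeably higher loss. That extra cost is the pressure that keeps the model from pushing its logits apart indefinitely.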

Interpreting the Training Dynamics

From the training log, several important patterns emerge:

First, training accuracy reaches ~100% as early as epoch 7, yet validation accuracy continues to improve over many subsequent epochs, with some fluctuation, from 76.8% at epoch 7 to a peak of 84.7% at epoch 45. This indicates that the model is not merely memorizing labels, but is continuing to refine its decision boundaries under regularization pressure.

Second, despite perfect training accuracy, the training loss remains relatively high (~0.70) instead of collapsing toward zero. This behavior is expected, and desirable, when label smoothing is applied. Because the target distribution is no longer one-hot, the minimum achievable loss is strictly greater than zero. In other words, the model is prevented from becoming overconfident by design.

Third, validation loss decreases gradually and remains stable across epochs, which is consistent with improved calibration and reduced variance. The absence of sharp validation loss spikes suggests that label smoothing is acting as an effective regularizer rather than destabilizing optimization.
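The nonzero loss floor can be worked out directly: the cross-entropy against a smoothed target is lowest when the softmax output equals that target, and at that point the loss equals the target's entropy. The sketch below computes that value under two assumptions: 10 classes (CIFAR-10) and the uniform-over-all-classes smoothing convention. `smoothed_loss_floor` is a name I made up for illustration.

```python
import math

def smoothed_loss_floor(eps, num_classes):
    """Entropy of the smoothed target, i.e. the lowest reachable cross-entropy.

    Assumes the true class gets (1 - eps) + eps / K and every other
    class gets eps / K, with K = num_classes.
    """
    p_true = 1.0 - eps + eps / num_classes
    p_other = eps / num_classes
    floor = -p_true * math.log(p_true)
    if p_other > 0.0:  # skip the 0 * log(0) term when eps == 0
        floor -= (num_classes - 1) * p_other * math.log(p_other)
    return floor

print(f"{smoothed_loss_floor(0.15, 10):.4f}")  # about 0.6924
```

Under these assumptions the floor for ε = 0.15 is about 0.692, so a training loss hovering around 0.70 means the model is already sitting very close to the best value the smoothed objective allows.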

Why Stronger Label Smoothing Helped

Increasing the smoothing factor to ε = 0.15 amplified several beneficial effects:

– It further penalized excessive logit separation, preventing the classifier head from dominating optimization

– It encouraged wider margins and smoother decision boundaries in feature space

– It reduced sensitivity to mislabeled or ambiguous samples, which are common in small or downsampled datasets

With longer training, these effects compound over time. Early epochs establish coarse alignment with the task, while later epochs—under label smoothing—refine class separation without collapsing into brittle, overconfident predictions.
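The "excessive logit separation" point in the first bullet can be illustrated with a toy calculation. In the simplified setting below, the true class has logit `gap` and all nine other classes have logit 0. With hard labels the loss keeps falling as the gap grows, but with smoothing there is a finite best gap, and pushing past it raises the loss again. The function names are hypothetical, and the setup assumes 10 classes and the uniform-over-all-classes smoothing convention.

```python
import math

def loss_at_gap(gap, eps, num_classes):
    """Smoothed cross-entropy when the true logit is `gap` and all others are 0."""
    log_z = math.log(math.exp(gap) + (num_classes - 1))
    p_true = 1.0 - eps + eps / num_classes
    # loss = log Z - sum_i q_i * z_i; only the true class has a nonzero logit
    return log_z - p_true * gap

def optimal_gap(eps, num_classes):
    """Gap at which the softmax output equals the smoothed target."""
    p_true = 1.0 - eps + eps / num_classes
    p_other = eps / num_classes
    return math.log(p_true / p_other)

best = optimal_gap(0.15, 10)
for gap in (best - 2.0, best, best + 2.0, best + 6.0):
    print(f"gap={gap:5.2f}  loss={loss_at_gap(gap, 0.15, 10):.4f}")
```

For ε = 0.15 the best gap works out to roughly 4.05, and the loss rises on either side of it. A larger ε moves that optimum closer to zero, which is one way to read "stronger smoothing penalizes logit separation more".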

Why This Improvement Is Not Just Noise

Unlike the earlier +0.3% gain, the jump from 82.1% to 84.7% is both larger in magnitude and supported by consistent behavior in the training curves. Validation accuracy reaches 84% or higher at epochs 43, 45, and 57, peaking at epoch 45, rather than appearing as a single outlier spike.

This strongly suggests that the performance gain is systematic and attributable to the combined effect of:

– Stronger label smoothing

– Extended training duration

– A stable transfer learning configuration (ResNet-18, partial unfreezing, data augmentation)
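One way to check the "not a single spike" claim is to parse the log and look at a window of epochs instead of only the best one. The sketch below does that for a five-epoch excerpt copied from the 60-epoch log (epochs 43 to 47). The regular expression and the helper name `val_accuracy_by_epoch` are my own illustration and assume the log format shown in this post.

```python
import re

# Excerpt copied from the 60-epoch log in this post (epochs 43 to 47).
LOG = """\
Epoch [43/60] Train Loss: 0.7062 | Val Loss: 1.1072 | Train Acc: 100.00% | Val Acc: 84.30%
Epoch [44/60] Train Loss: 0.7045 | Val Loss: 1.1163 | Train Acc: 100.00% | Val Acc: 83.90%
Epoch [45/60] Train Loss: 0.7037 | Val Loss: 1.1094 | Train Acc: 100.00% | Val Acc: 84.70%
Epoch [46/60] Train Loss: 0.7048 | Val Loss: 1.1132 | Train Acc: 100.00% | Val Acc: 83.40%
Epoch [47/60] Train Loss: 0.7049 | Val Loss: 1.1107 | Train Acc: 100.00% | Val Acc: 81.60%
"""

# Capture the epoch number and the first "Val Acc" value that follows it.
PATTERN = re.compile(r"Epoch \[(\d+)/\d+\].*?Val Acc: ([\d.]+)%")

def val_accuracy_by_epoch(log_text):
    """Map epoch number -> validation accuracy in percent."""
    return {int(epoch): float(acc) for epoch, acc in PATTERN.findall(log_text)}

acc = val_accuracy_by_epoch(LOG)
best_epoch = max(acc, key=acc.get)
window_mean = sum(acc.values()) / len(acc)
print(best_epoch, acc[best_epoch], round(window_mean, 2))
```

Comparing a window average between runs is less sensitive to one lucky epoch than comparing best-epoch numbers, so it is a useful sanity check to run alongside the headline figure.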

Key Takeaway

Label smoothing is not a plug-and-play trick: it interacts deeply with training duration and model capacity. When properly tuned and given sufficient epochs, it can significantly improve generalization performance, even after other regularization techniques are already in place.

In this project, label smoothing emerges as a high-impact regularizer, pushing ResNet-18 validation accuracy to a new best of 84.7%.
